My passion lies in the captivating world of natural language processing, where I love delving into its intricacies and uncovering its hidden potential. An explorer at heart, I am captivated by the thrill of investigating novel concepts, while tedious, repetitive engineering tasks hold little allure for me.

My research interests lie in the domain of question answering (QA), encompassing complex reasoning and multi-modal question-answering systems. I have gained extensive research and engineering internship experience at the Chinese Academy of Sciences. To date, I have authored three papers as the first author, which have been published at AI conferences including ICLR, EMNLP, and ICASSP.

🔥 News

- 2024.01: 🎉🎉 Neural Comprehension has been accepted to ICLR 2024 as a poster!

- 2023.08: 🎉🎉 We’ve released LMTuner, a groundbreaking system where anyone can train large models in just 5 minutes!

- 2023.04: 🎉🎉 We’ve created Neural Comprehension - a breakthrough enabling LLMs to master symbolic operations!

📝 Publications

Mastering Symbolic Operations: Augmenting Language Models with Compiled Neural Networks

Yixuan Weng, Minjun Zhu, Fei Xia, Bin Li, Shizhu He, Kang Liu, Jun Zhao

- We enable language models to comprehend concepts at a more fundamental level, achieving absolute accuracy in symbolic reasoning without additional tools.

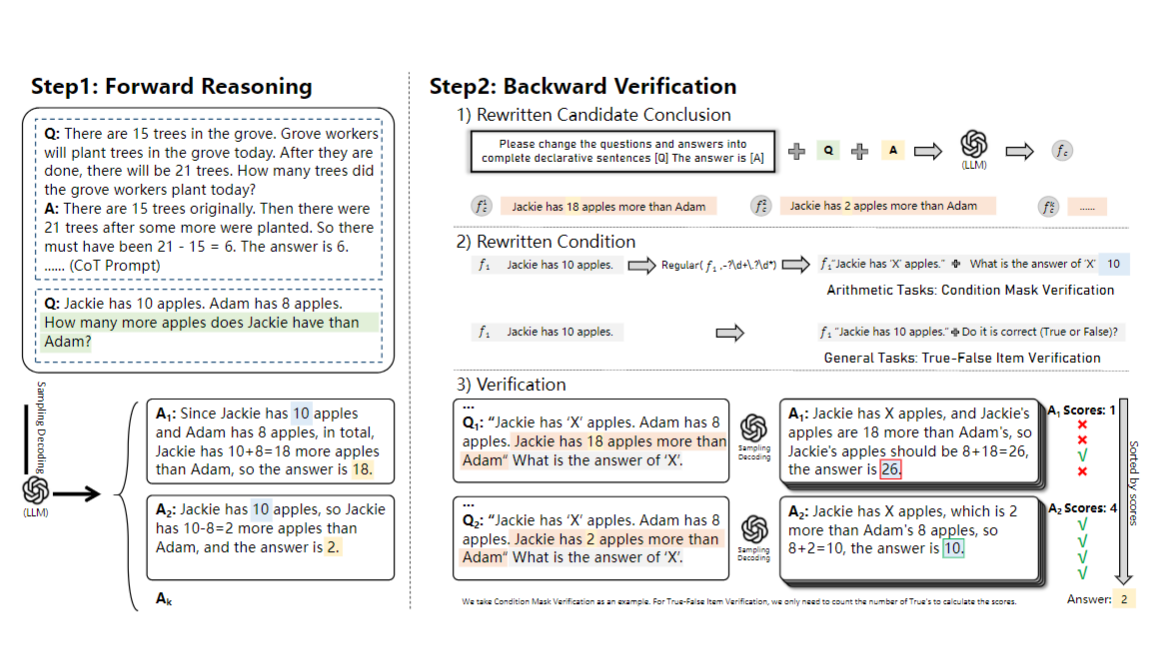

Large Language Models are Better Reasoners with Self-Verification

Yixuan Weng, Minjun Zhu, Fei Xia, Bin Li, Shizhu He, Shengping Liu, Bin Sun, Kang Liu, Jun Zhao

- We have demonstrated that language models have the capability for self-verification, and can further improve their own reasoning abilities.

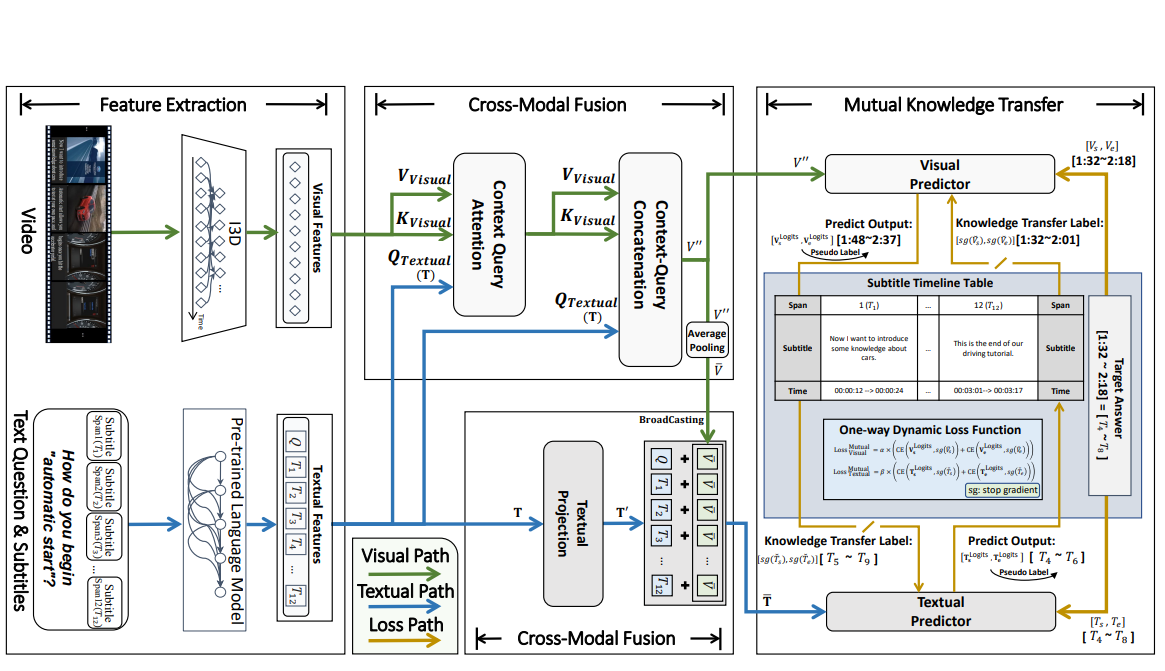

Visual Answer Localization with Cross-Modal Mutual Knowledge Transfer

Yixuan Weng, Bin Li

- We introduce a cross-modal mutual knowledge transfer approach for localizing visual answers in images and videos.

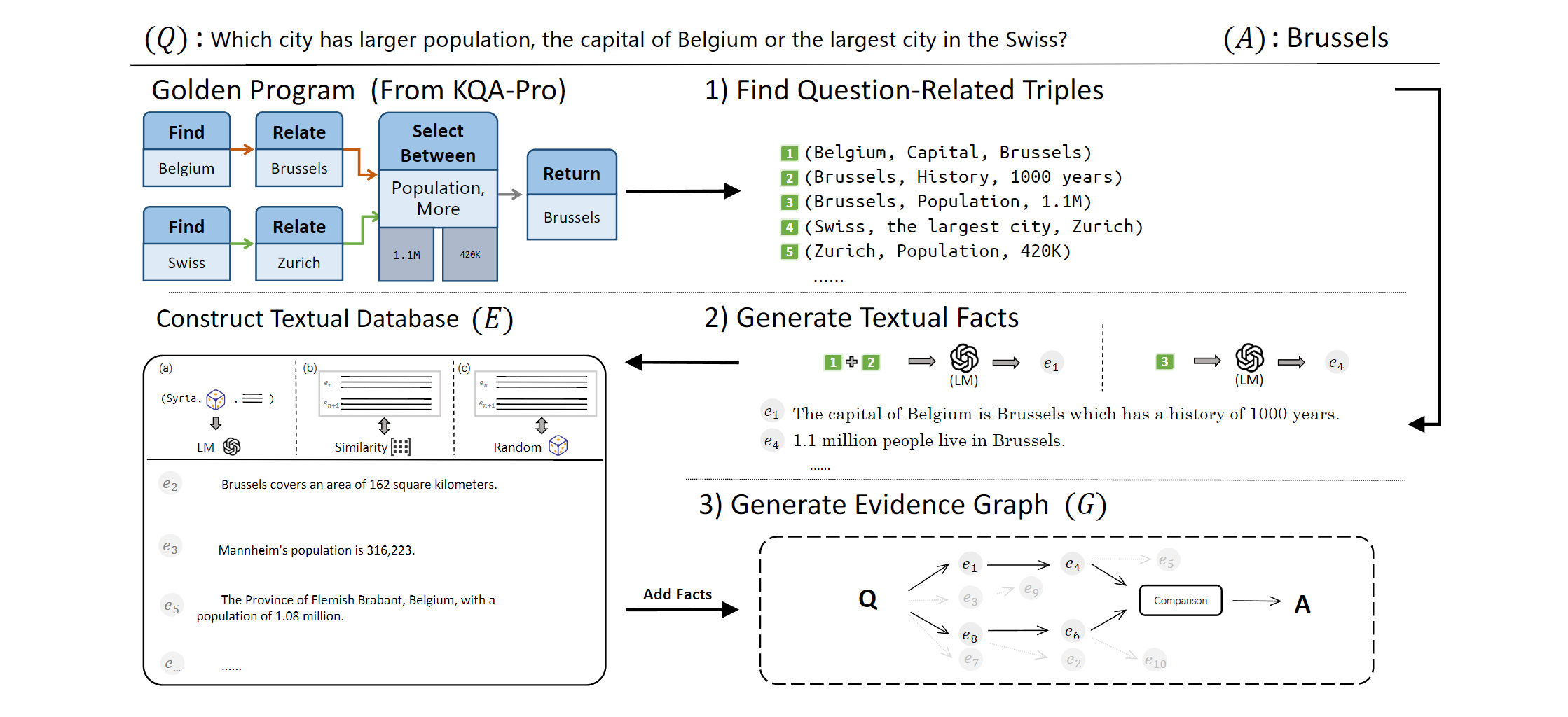

Towards Graph-hop Retrieval and Reasoning in Complex Question Answering over Textual Database

Minjun Zhu, Yixuan Weng, Shizhu He, Kang Liu, Haifeng Liu, Yang Jun, Jun Zhao

Project

- We propose Graph-Hop, a novel multi-chain, multi-hop retrieval and reasoning paradigm for complex question answering. We construct a new benchmark called ReasonGraphQA, which provides explicit and fine-grained evidence graphs for complex questions to support comprehensive and detailed reasoning.

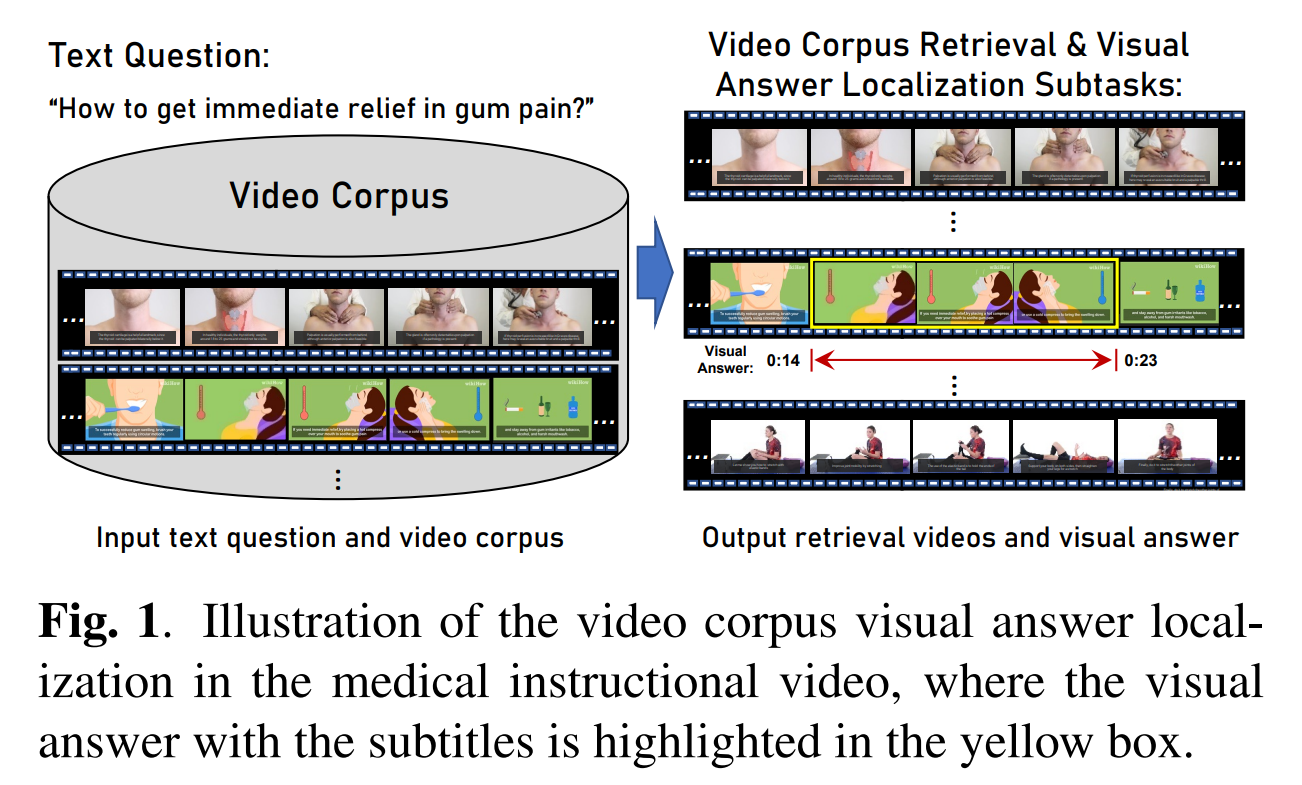

Learning To Locate Visual Answer In Video Corpus Using Question

Bin Li, Yixuan Weng, Bin Sun, Shutao Li

- We propose a novel approach to locate visual answers in a video corpus using a question.

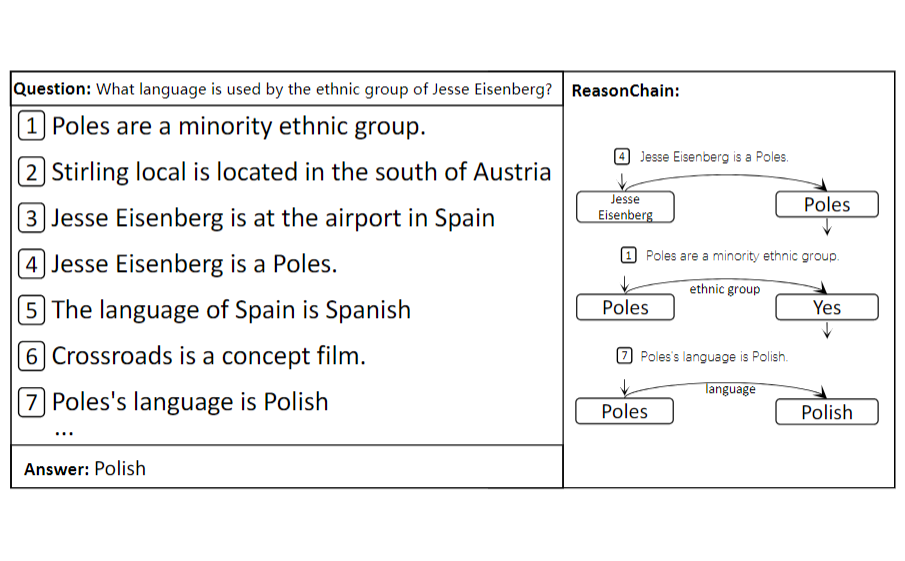

Learning to Build Reasoning Chains by Reliable Path Retrieval

Minjun Zhu, Yixuan Weng, Shizhu He, Cunguang Wang, Kang Liu, Li Cai, Jun Zhao

Project

- We propose ReliAble Path-retrieval (RAP) for complex QA over knowledge graphs, which iteratively retrieves multi-hop reasoning chains. It models chains comprehensively and introduces losses from two views. Experiments show state-of-the-art performance on evidence retrieval and QA. Additional results demonstrate the importance of modeling sequence information for evidence chains.

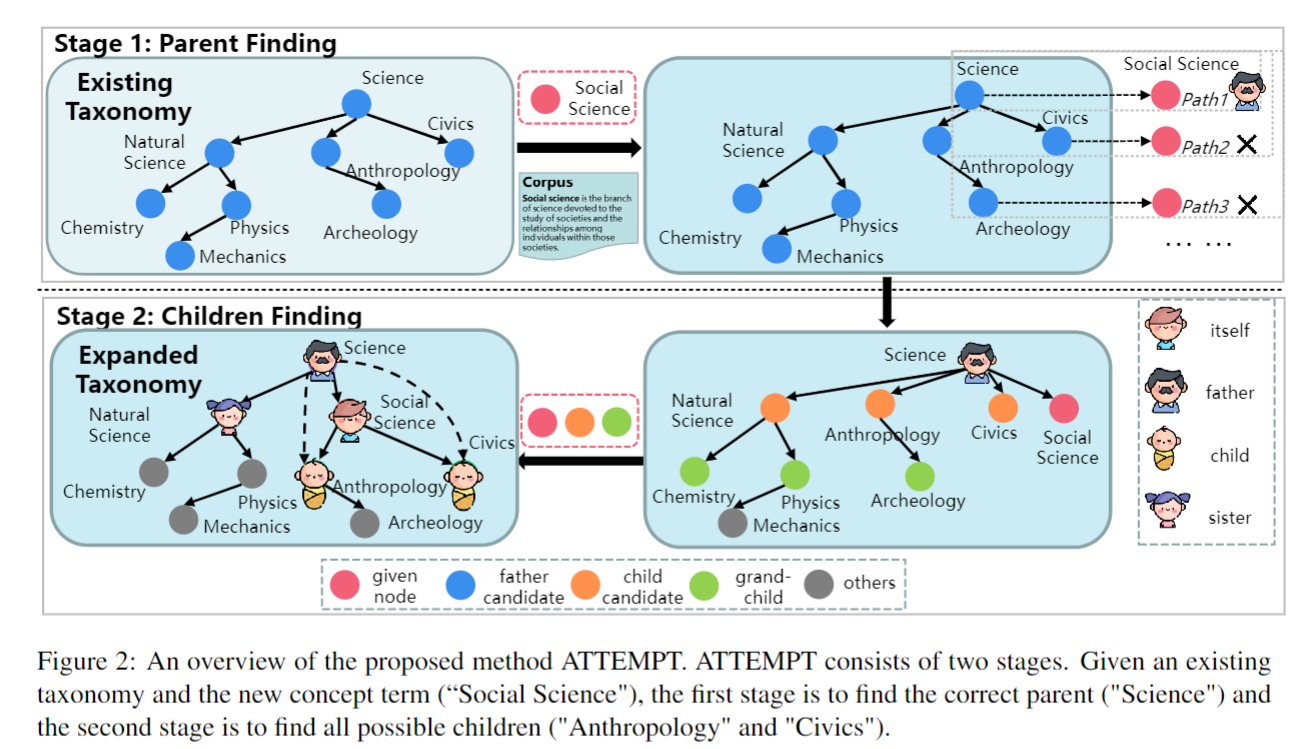

Fei Xia, Yixuan Weng, Shizhu He, Kang Liu, Jun Zhao

- We propose ATTEMPT, a two-stage method for taxonomy completion that inserts new concepts by finding a parent and labeling children. It combines local nodes with prompts to form natural sentences and uses pre-trained language models for hypernym/hyponym recognition. It outperforms existing methods on taxonomy completion and extension tasks.

MedConQA: Medical Conversational Question Answering System based on Knowledge Graphs

Fei Xia, Bin Li, Yixuan Weng, Shizhu He, Kang Liu, Bin Sun, Shutao Li, Jun Zhao

- We propose MedConQA, a medical conversational QA system using knowledge graphs, to address weak scalability, insufficient knowledge, and poor controllability in existing systems. It is a pipeline framework with open-sourced modular tools for flexibility. We construct a Chinese Medical Knowledge Graph and a Chinese Medical CQA dataset to enable knowledge-grounded dialogues. We also use SoTA techniques to keep responses controllable, as validated through professional evaluations. Code, data, and tools are open-sourced to advance research.

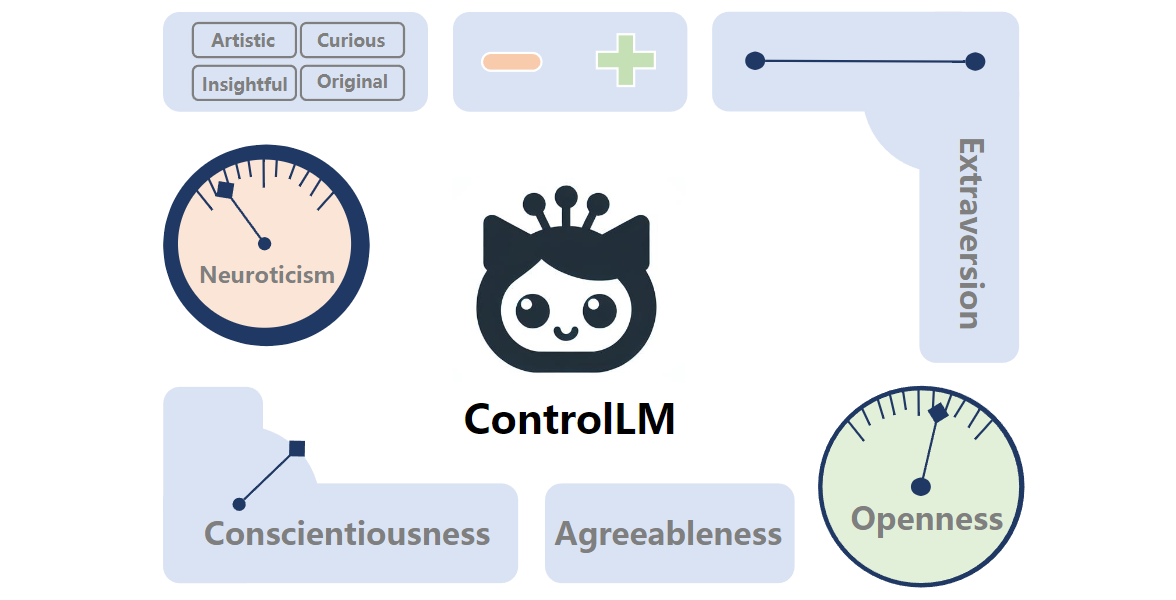

ControlLM: Crafting Diverse Personalities for Language Models

Yixuan Weng, Shizhu He, Kang Liu, Shengping Liu, Jun Zhao

- We enable control of the personality traits and behaviors of language models in real time at inference, without costly training interventions.

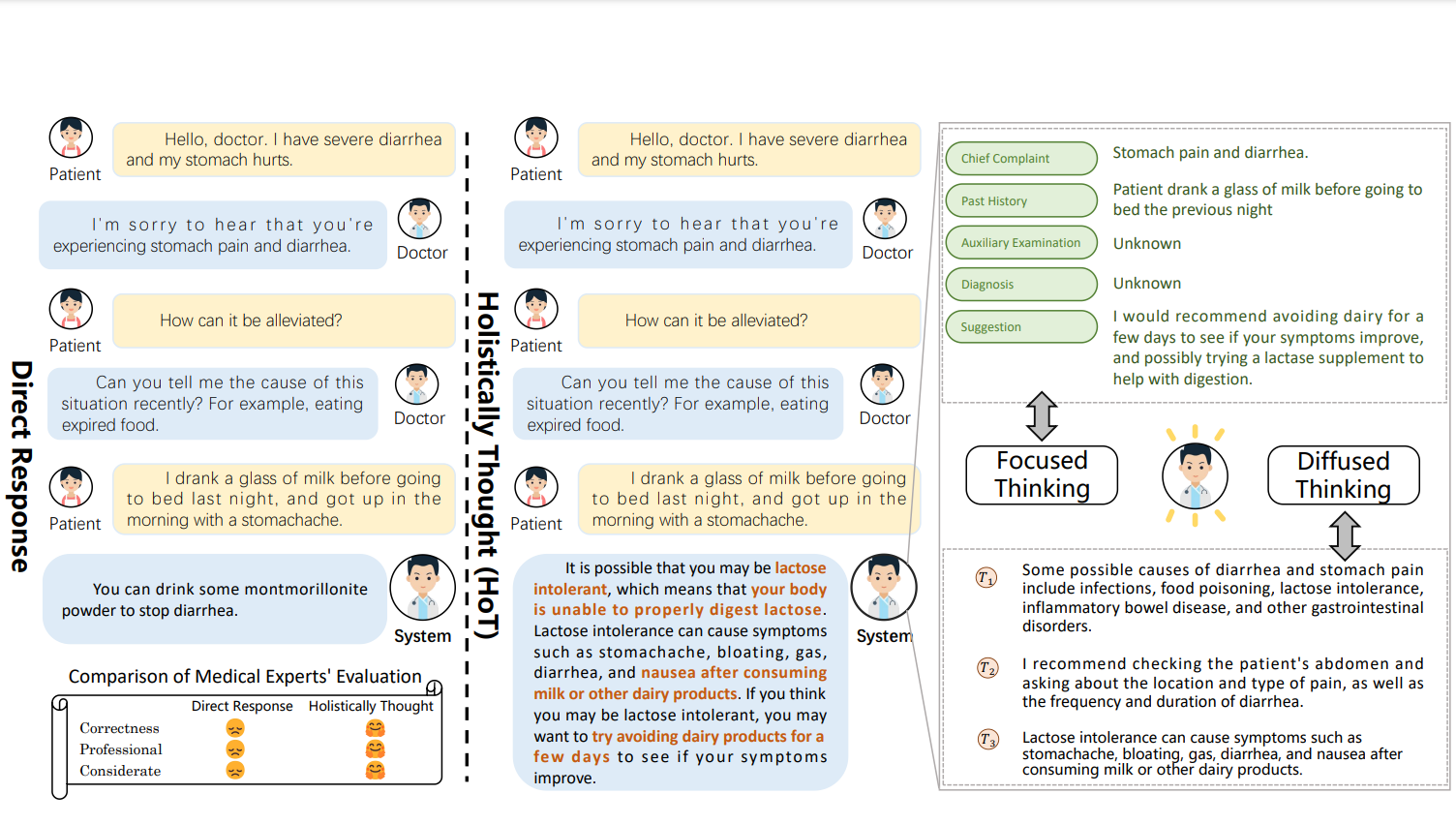

Large Language Models Need Holistically Thought in Medical Conversational QA

Yixuan Weng, Bin Li, Fei Xia, Minjun Zhu, Bin Sun, Shizhu He, Kang Liu, Jun Zhao

- We propose a holistic thinking approach for improving the performance of large language models on both Chinese and English medical conversational QA tasks.

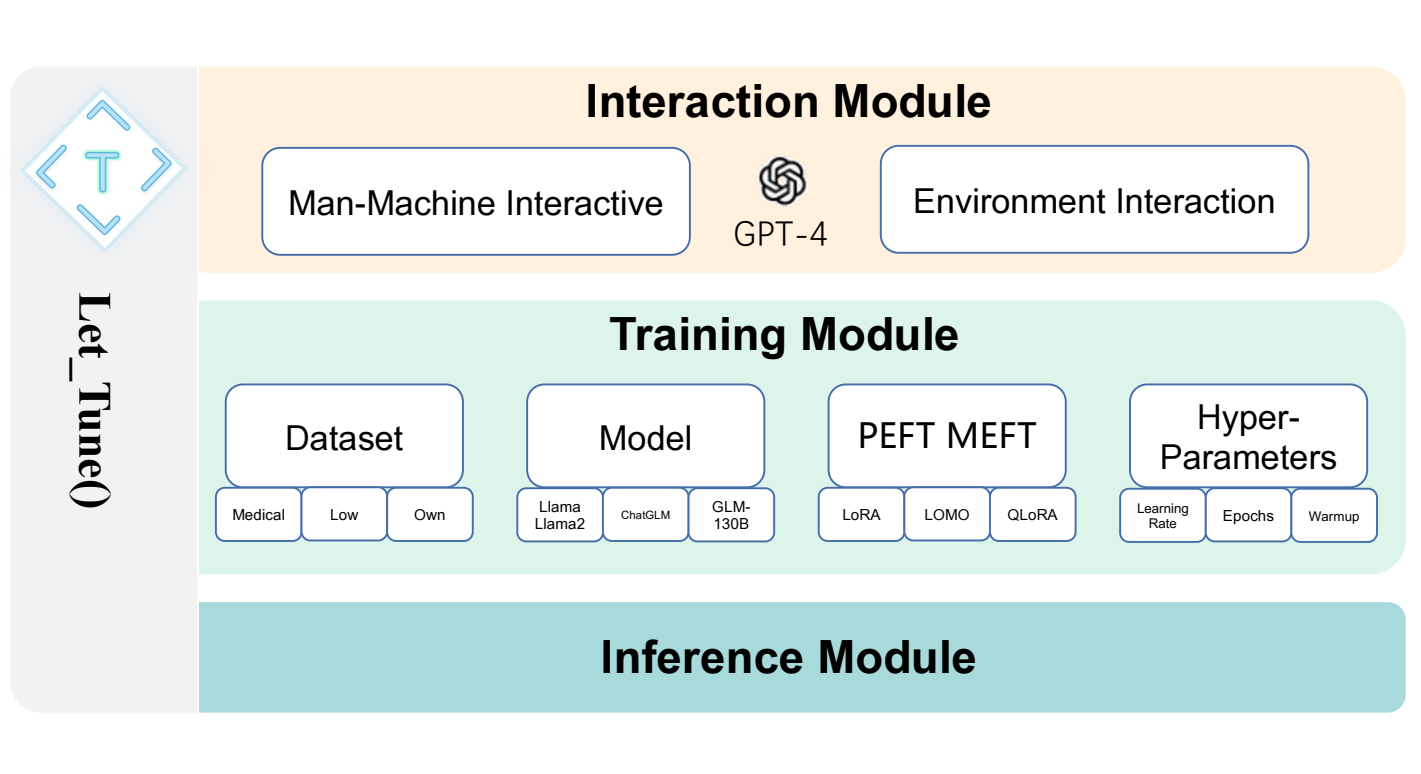

Yixuan Weng, Zhiqi Wang, Huanxuan Liao, Shizhu He, Shengping Liu, Kang Liu, Jun Zhao

- We advocate that LMTuner’s usability and integrality alleviate the complexities in training large language models. Remarkably, even a novice user could commence training large language models within five minutes. Furthermore, it integrates the DeepSpeed framework and supports efficient fine-tuning methods such as Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA).

🎖 Honors and Awards

- 2022.05 BioNLP-2022: Medical Video Classification, First Place

- 2022.04 CBLUE First Place

- 2022.01 SemEval22-Task3 PreTENS, First Place

- 2021.11 SDU@AAAI-22: Acronym Disambiguation, First Place

💻 Internships

- 2021.09 - , CASIA, China.